The Hydrino Hypothesis Chapter 11

An Introduction To The Grand Unified Theory Of Classical Physics

This monograph is an introduction to Randell L. Mills’ Grand Unified Theory of Classical Physics, Hydrino science, and the efforts of the company Brilliant Light Power (BLP) to commercialize Hydrino-based power technology, as told by Professor Jonathan Phillips. Out of necessity, it assumes a degree of familiarity with physics and physics history. An overview of the BLP story which serves as a helpful introductory piece to those unfamiliar with its sweeping scope can be found here. Readers should also read the previous chapters of this monograph prior to this one:

Chapter 1 of The Hydrino Hypothesis

Chapter 2 of The Hydrino Hypothesis

Chapter 3 of The Hydrino Hypothesis

Chapter 4 of The Hydrino Hypothesis

Chapter 5 of The Hydrino Hypothesis

Chapter 6 of The Hydrino Hypothesis

Chapter 7 of The Hydrino Hypothesis

Chapter 8 of The Hydrino Hypothesis

Chapter 9 of The Hydrino Hypothesis

Chapter 10 of The Hydrino Hypothesis

By Professor Jonathan Phillips

Is Dark Matter Hydrino?

This chapter is the fourth chapter in which the Hydrino Hypothesis is shown to agree with all verified data. This chapter shows that the GUTCP postulate that dark matter is Hydrino is consistent with all data and hence remains a valid scientific theory. This is contrasted with the many metaphysical models postulated by the general astrophysics community. It is demonstrated that none of these models is a validated scientific theory.

Introduction

The last topic to be covered in detail in this monograph is the simple GUTCP explanation for observations that led scientists to postulate that most of the mass of the universe is mysterious “dark matter.”

To wit: Hydrinos are dark matter.

This topic brings together most of the elements addressed in earlier chapters, including the proper scientific process and scientific philosophy and the outcome of tests described in detail in earlier chapters which are re-purposed/re-interpreted as tests of the GUTCP dark matter hypothesis.

Indeed, Balmer line broadening, EUV spectroscopy, and even calorimetry data are all quantitatively consistent with the Hydrino-as-dark-matter hypothesis. Most remarkable of all, spectroscopic data in the Extreme Ultraviolet (EUV) collected from cosmic bodies is quantitatively consistent with predictions of the GUTCP dark matter model.

Once again, it proves valuable to contrast two modelling approaches. In one corner is the GUTCP theory of dark matter. This model is integrated and consistent with all the other GUTCP models, particularly the quantum model and Hydrinos, hence with classical physics, pre-1871, as well. In the other corner is the physics and astrophysics community which has thrown out a bewildering array of theories on the nature of dark matter. All the astrophysics community theories, it should be noted, are not in any fashion correlated to SQM or any other quantum theory. These are “stand-alone” models.

In those cases in which some experimental tests are conducted to test predictions of favored models, favored by those with sway in the astrophysics community, the outcome is a complete failure to support the hypotheses.

Outcomes predicted by these theories are never observed.

Scientific logic indicates these theories are thus debunked. Another class of dark matter theories is simply untestable; these are metaphysics, as defined previously. Yet another class, not favored by those with authority, consists of theories that are simply ignored. Not surprisingly, the GUTCP theory of dark matter is in this category. Yet all data collected is quantitatively consistent with the GUTCP dark matter hypothesis: dark matter is Hydrino.

Dark Matter

One of the great unsolved observations in cosmology (cosmetology?) is the absence of sufficient matter to account for the stability of the Milky Way and other galaxies.1 For example, given the velocity of the stars in the arms of the Milky Way, and the net mass of the “lit” (visible) part of the galaxy, there is an imbalance in the classic force balance for a star of mass m moving at velocity v on a circular orbit of radius R around a central mass M (G is the gravitational constant):

$$\frac{m v^2}{R} = \frac{G M m}{R^2}$$

Unless the centripetal force represented by the left side of the equation equals the gravitational force pulling the mass toward the center, the value of “R”, the radius, will adjust. Only if there is perfect balance will the system be stable.

Example: spin a ball around you on a string. The pull on the string, that is the force it exerts on the ball, is a function of how fast it is spinning around you and how far it is from you. The faster you spin, the more force will be required for you to hold the ball at the same radius. That is, to achieve a constant radius, force and velocity must adjust. If the inward force is larger than the centripetal force, the value of R will decrease, and velocity will increase. If the string is too weak, it will break and the ball will simply fly off in a straight line.

In a similar fashion, there is strong observational evidence that the gravitational mass of the visible matter of the Milky Way is not enough to create a force able to hold the stars in orbit around the galactic center. Observation of lit matter shows insufficient mass. The stars, according to conventional models of gravity, should simply shoot out and away from the galactic center. They don’t.

The simple solution posited by the physics community for the conundrum of “too little visible mass” to hold the galaxy together is the dark matter hypothesis: there is a type of matter that is invisible which provides the missing gravitational force holding the galaxy together. The name given this invisible mass is dark matter.

The physics community has interpreted the lack of visibility of this material as a lack of interaction with light; the material cannot be detected except through its gravitational influence. That is, it is generally accepted that dark matter neither emits nor reflects light. The term “generally accepted” is employed because some models of dark matter postulate it is in the form of massive objects composed of ordinary matter, like brown dwarf stars. Yes, the mystery of dark matter is so complete that all sorts of theories, except of course the GUTCP and the HH, are permitted in establishment physics circles.

The primary observation inconsistent with the amount of matter is the velocity distribution of stars. Specifically, it is clear that the velocity of stars at the outer reaches of our own galaxy, as well as virtually all other galaxies studied, is far faster than anticipated.

Why an anticipated velocity? A good example of “proper”, and anticipated, velocity behavior is the observed velocities of the planets of the solar system. The further a planet is from the sun, the slower it moves, and the longer it takes to complete a full revolution around the sun. This is old stuff. It is actually part of Kepler’s Laws of planetary motion. The orbital velocity of the planets in the solar system clearly falls with a planet’s distance from the sun.
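The planetary behavior described above can be checked with a short calculation. The sketch below is illustrative only: it assumes circular orbits, uses rounded textbook orbital radii, and applies the force balance for a circular orbit, which gives v = sqrt(GM/R). The constant and variable names are choices made for this example.

```python
import math

GM_SUN = 1.32712440018e20   # m^3/s^2, gravitational parameter of the sun
AU = 1.495978707e11         # m, one astronomical unit

# Approximate mean orbital radii in AU (rounded textbook values, circular orbits assumed).
PLANET_RADII_AU = {
    "Mercury": 0.387, "Venus": 0.723, "Earth": 1.000, "Mars": 1.524,
    "Jupiter": 5.203, "Saturn": 9.537, "Uranus": 19.19, "Neptune": 30.07,
}

def circular_speed(radius_m, gm=GM_SUN):
    """Speed from the force balance m v^2 / R = G M m / R^2, i.e. v = sqrt(GM / R)."""
    return math.sqrt(gm / radius_m)

speeds_km_s = {
    name: circular_speed(r_au * AU) / 1000.0
    for name, r_au in PLANET_RADII_AU.items()
}

for name, v in speeds_km_s.items():
    print(f"{name:8s} {PLANET_RADII_AU[name]:6.3f} AU  {v:5.1f} km/s")
```

The printed speeds fall steadily from Mercury to Neptune, roughly as one over the square root of the distance. This is the fall-off that stars in galaxies fail to show.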

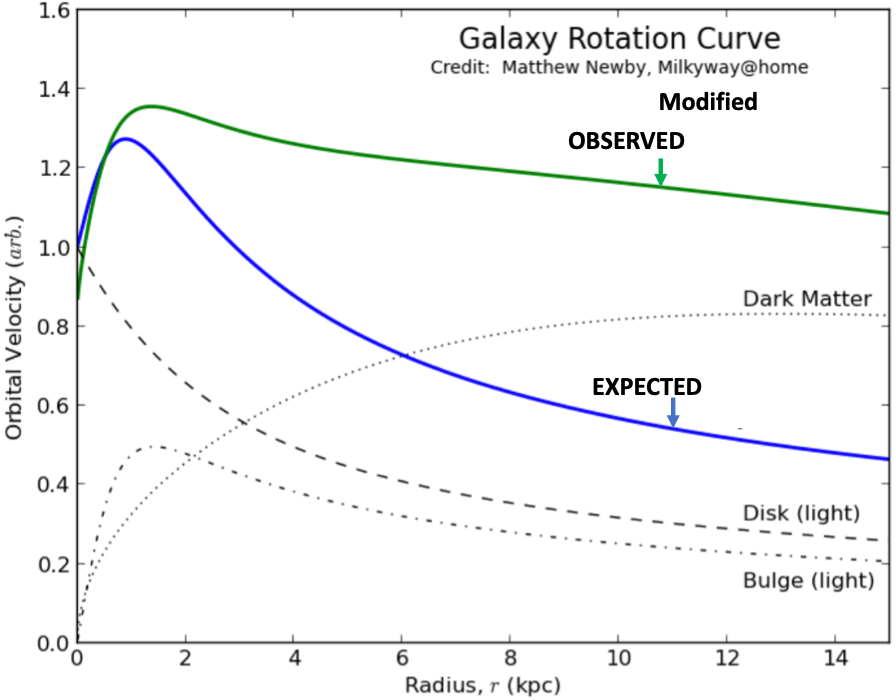

In contrast, the rate of stellar rotation in our galaxy and others, as well as in galaxy clusters, is called “flat” because the fall off of the rotation rate/absolute velocity with increasing distance from the center is much less than anticipated (Figure 11-1).

The flat rotation rate is attributed to the gravitational effects of dark matter, in this case not just the amount of dark matter, but its necessary distribution. This observation suggests that there must be a great deal of “dark matter,” and furthermore, that this material must follow a particular distribution. That is, the velocity profiles observed can only be explained if the amount of dark matter enclosed within a given radius keeps increasing with distance from the galactic center (dotted curve, Figure 11-1). It is generally understood that dark matter must form a halo, probably larger than the visible component of the galaxy, extending outside the main lit stellar part.

Figure 11-1: Flat Velocity Curves for Galaxies- As shown (Green) the observed velocity of visible stars in galaxies does not drop with distance from the center as anticipated (Blue). This suggests (dotted curve) that most dark matter is at the outer edge of galaxies. In sum, standard gravity models explain the failure of galaxies to spin apart as due to a halo of dark matter at the outer edge of galaxies. (After: https://physics.stackexchange.com/questions/134159/what-is-a-flat-rotation-curve.)
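To see what a flat curve demands, the same circular-orbit force balance can be turned around to give the mass enclosed within radius r, M = v²r/G. The sketch below is a rough illustration, not a galaxy model: the flat speed of 220 km/s and the sample radii are assumed values chosen for this example.

```python
G = 6.674e-11       # m^3 kg^-1 s^-2, gravitational constant
KPC = 3.0857e19     # m, one kiloparsec
M_SUN = 1.989e30    # kg, one solar mass

def enclosed_mass(v_m_s, r_m):
    """Mass inside radius r required for a circular orbit at speed v: M = v^2 r / G."""
    return v_m_s ** 2 * r_m / G

V_FLAT = 220e3                  # m/s, assumed illustrative flat rotation speed
radii_kpc = [5, 10, 20, 40]     # assumed sample radii

masses_msun = [enclosed_mass(V_FLAT, r * KPC) / M_SUN for r in radii_kpc]

for r, m in zip(radii_kpc, masses_msun):
    print(f"r = {r:3d} kpc  ->  enclosed mass ~ {m:.2e} solar masses")
```

With the speed held constant, doubling the radius doubles the required enclosed mass. The mass must therefore keep growing well beyond the region where the lit stars are concentrated.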

In general, the only standard property dark matter possesses is gravitational mass. Unlike other matter, it apparently does not reflect or generate light. In fact, most now subscribe to the notion that this mass is non-baryonic, which in essence means matter of a form with which we are unfamiliar. That is, the consensus is that dark matter is probably not composed of ordinary matter such as electrons, neutrons, quarks, or any of the other identified elementary particles. Nothing like a nice, unsolvable, meta-physics narrative to attract research funding for truly large experimental apparatuses?

It must be noted that the shortage of visible matter is not small. NASA officially computes the composition of the universe to be 68% dark energy, 27% dark matter and only 5% the type of matter that exists here on earth.2 So, it turns out that to hold our galaxy, and others as well, together there must be approximately 5X more dark matter than standard baryonic matter. Wow.

Dark Energy

Notice in the above NASA list of materials that an additional non-baryonic substance is mentioned, dark energy (Ref 11-2). The existence of this matter is postulated to explain the relatively recent observation, from 1998, that the universe is expanding at an accelerating rate.3 Some force, dubbed dark energy, is pushing all galaxies apart. Only by the application of a force can objects be accelerated. Current computations indicate there is approximately 14 times more mass in the form of dark energy than the cosmological materials we here on earth have identified.
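The “5X” and “14 times” figures follow directly from the NASA percentages quoted above, as this short arithmetic check shows. The dictionary keys are labels chosen for this example.

```python
# Percentages quoted in the text from NASA (Ref 11-2).
composition_percent = {
    "dark energy": 68.0,
    "dark matter": 27.0,
    "ordinary matter": 5.0,
}

ordinary = composition_percent["ordinary matter"]
dark_matter_ratio = composition_percent["dark matter"] / ordinary
dark_energy_ratio = composition_percent["dark energy"] / ordinary

print(f"dark matter / ordinary matter = {dark_matter_ratio:.1f}")
print(f"dark energy / ordinary matter = {dark_energy_ratio:.1f}")
```

The ratios come out near 5.4 and 13.6, which round to the approximate figures used in the text.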

Wherein lie the origins of the dark energy concept? According to the Big Bang Theory, the universe should be expanding at a constant rate. This is a Newton’s Law expectation: shortly after the Big Bang banged, all matter should have attained a constant velocity. Indeed, according to Newton’s First Law, an object in motion, not subject to an ongoing force, will remain in motion at a constant velocity.

The expected change, therefore, in the Big Bang model is a slight slowing over time due to weak gravitational interactions between galaxies, etc. pulling matter back together. (By analogy, once the string attached to the ball, per above, is cut, the expectation is the ball will fly away, but its velocity will gradually decrease because of frictional forces.)

Problem: observations show that galaxies are moving apart at a faster and faster rate, suggesting an active force is pushing/pulling them apart. This leads to the standard fix to the cosmological model: there is another form of energy, dark energy, spread throughout the universe that creates a force that pushes/pulls standard matter apart. Once the amount of energy required to cause the observed acceleration is computed, one can use Einstein’s equation for the equivalence of matter and energy, E = mc², to determine the mass associated with this dark energy. Result: there is a tremendous amount of dark energy. In any event, it is clear that if this energy is converted into mass, it will not be the mass of ordinary matter. Indeed, it acts more like an “anti-gravity” source, pushing apart, not pulling together. OK, time to call Mr. Spock.

Models of Dark Matter

The preceding shows there are many rabbit holes to go down. The GUTCP provides a simple, concise, reasonable, and quantified model of both dark matter and dark energy. However, for this essay only dark matter will be considered in detail. (Those interested in the GUTCP explanation for dark energy can peruse Chapter 32, Gravity in the Source Text.)

It will be shown that once again, as with other facets of the GUTCP, compared to other theories that purport to explain the mystery of dark matter, only the GUTCP prediction that Hydrino is dark matter affords a quality (and testable!) model of the observed phenomenon. It is also the only theory consistent with a form of quantum theory. All the theories advanced by the astrophysics community are quantum theory free.

As a first step in reviewing dark matter models, it is critical to review the scientific process outlined earlier. This outline will be employed in evaluation of different dark matter models:

1. Given new data that cannot be explained directly with existing models, propose new ones.

2. Rate models on the basis of their alignment with existing physics. Those theories that i) do not require dramatic modifications, or even cancellation of thoroughly tested models, for example Newton’s Laws and Maxwell’s equations, or ii) do not require the existence of hitherto unobserved categories of matter, are preferred.

3. Determine if the proposed models are physics or metaphysics. Is it possible to test the model? Yes: Physics. No: Metaphysics.

4. For those models deemed to be proper physics, design and carry out experiments that are meant to debunk the theory/model. Remember, it is impossible to prove anything, but it is possible to falsify/disprove/debunk. Also, note physics, being about the “real world,” is an empirical science. If a model fits the data, it remains valid.

5. To determine the true value of any model that fails to be debunked, apply the quantitative requirement. Does it make quantitative and testable predictions? After all, the real significance of Maxwell’s equations, Newton’s Laws, thermodynamics and other successful physics models is they are quantitative and can be employed to engineer devices that work precisely as expected. Only quantitative predictions, demonstrated by experiment to be accurate, fully validate a physics model.

In the next few paragraphs a brief historical review of the concept of dark matter, a very old concept, is provided. Next, theory “categories” of modern models of dark matter are outlined, as well as some of the failed experiments designed to support them. Finally, the GUTCP model is compared with data, and shown to be arguably the only model which is consistent with all the data.

Dark Matter History

Early Greek astronomers, etc., more than two thousand years ago were the first to suggest the idea of dark matter, that is, matter present but not observable.4 With the invention of the telescope, it became clear they were largely correct. Those cosmological objects, stars, galaxies, and planets, that are only visible with the aid of a telescope were dark matter before Galileo pointed his telescope at the heavens. In fact, one of the early leading modern theories of the nature of dark matter is that there are still types of stars (e.g. brown dwarfs) that even modern telescopes fail to observe.

OK, it’s nice to know there is a long history to the concept of dark matter, but there is no need herein to consider the full arc of recorded history of dark matter. For this essay only modern discussions of invisible/dark matter will be considered. In particular, this brief history of dark matter begins arbitrarily with the suggestion that some unknown/unseen mass must be responsible for some anomalies in the movement of cosmic objects.

The mathematician Bessel argued the observed motions of the stars Sirius and Procyon required the presence of an invisible mass. Extrapolating that postulate, he hypothesized there may be “numberless invisible” stars.5 Other early modern discussions arose as telescopes became more powerful and clearly showed an irregular distribution of stars in the galaxy.

The question arose: why aren’t the stars more evenly distributed? An early hypothesis was that the stars are evenly distributed, but there are non-light emitting masses between observer and stars that absorb the light. In the current vernacular these would be dark matter clouds.6

More recently, observations consistent with the gravitational impact, per General Relativity, of great quantities of matter on the trajectory of light have been made. That is, the mathematics of General Relativity indicates that the trajectory of light/photons is impacted by mass, thus light/photons are pulled toward mass. Given sufficient mass, light from a distant object will curve around the large mass, hence the mass acts like a lens (Figure 11-2). In practice this means a circle/arc of light would be observed around a dark matter patch in the heavens, this arc generated by a large dark matter concentration somewhere between light source and observer. Arcs identified as such have been observed.

Figure 11-2: Gravitational Lensing- Light passing a massive body (e.g. dark matter) is bent, per General Relativity.

Several prominent physicists invoked the notion of unseen matter in the early 20th Century. For example, Lord Kelvin promulgated the notion that in the Milky Way galaxy there may be as many as 10⁹ stars, perhaps 90% of them not visible. Yet, early quantitative analyses, for example by Poincaré, and later by Kapteyn, suggested there was not much dark matter in the universe, or at least not more than visible matter.7

The early approximations of the amount of dark matter were based on much speculation, extrapolation, etc. and were later rejected. The first accepted quantitative, data-based analysis was provided by a recognized pioneer in cosmology, Fritz Zwicky. He employed some sophisticated mathematical models developed in thermodynamics to estimate the masses of galaxies based on observed average velocity dispersions. The observed velocity dispersion of the type of galaxy he considered, 1000 km/sec, was clearly larger than anticipated, 80 km/sec, based on a reasonable estimate of the size and mass of the galaxies studied determined using telescopes.

Zwicky’s conclusion based on this observation:

“If confirmed we get the surprising result that dark matter is present in much greater amounts than luminous matter.”8

In other words, he was the first to suggest there isn’t enough gravity in the lit sky to create the gravitational forces needed to keep the galaxy together. And, to keep it together, galaxies must have more invisible than visible matter. This, and other analyses regarding the motion of stars in our own galaxy, other galaxies, and even groups of galaxies, led repeatedly to the conclusion there isn’t enough mass to create the gravity needed to hold galaxies together. Most of the mass of the galaxies must be “dark.”
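The size of the discrepancy can be made concrete with a rough scaling argument. The sketch below assumes the standard virial scaling, in which the mass of a gravitationally bound system of fixed size is proportional to the square of its velocity dispersion. That assumption, and the function name, are introduced here for illustration and are not a reconstruction of Zwicky’s full calculation.

```python
def virial_mass_ratio(sigma_observed_km_s, sigma_expected_km_s):
    """Ratio of implied masses at fixed size, assuming M is proportional to sigma^2."""
    return (sigma_observed_km_s / sigma_expected_km_s) ** 2

# Velocity dispersions quoted in the text: 1000 km/sec observed, 80 km/sec anticipated.
ratio = virial_mass_ratio(1000.0, 80.0)
print(f"implied mass / mass estimated from visible matter ~ {ratio:.0f}")
```

Under this assumption the observed dispersion implies over a hundred times more mass than the visible matter supplies, which is why Zwicky described the result as surprising.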

Gradually, the unresolved dark matter issue became more central to the astrophysics world. Dark matter is no longer something occasionally discussed in astrophysics circles; rather, it is now central to many careers, leading to thousands of publications on the topic.

Modern Models of Dark Matter

As shown in the figure below (Figure 11-3) there is an array of families of proposed dark matter models. As discussed below, the situation of multiple proposed solutions to the nature of dark matter exists because there is literally no evidence that any orthodox model is viable (in contrast to the GUTCP model). In the absence of evidentiary support for any model, lots of theories can be promulgated.

All models should be considered employing the five-step heuristic for model evaluation given above. This proposed scientific evaluation method indicates the mainstream (i.e. acceptable for publication in astrophysics journals) models of dark matter all fail some key tests. The models:

Require unknown forms of matter.

Require unknown forms of interaction, that is, they need fundamentally new laws of physics governing their interaction with other matter.

Are totally qualitative.

Have zero, nada, experimental support.

Generally are shown to be incompatible with well-established experimental data.

In contrast, as discussed below, some proposed solutions, specifically all three dark-matter-is-a-form-of-hydrogen models, are simply ignored by the mainstream physics community. Yet those models are easier to fit to the scientific process, as they all postulate dark matter is a form of regular matter, i.e. baryonic matter, that obeys classical physics laws. In particular, the GUTCP version of dark-matter-is-a-form-of-hydrogen is far from debunked, as it predicts many observations.

Below, several illustrative models from Figure 11-3 are reviewed in detail. All are found to be less than perfectly fitted to the observed data.

Figure 11-3: Map of Dark Matter Models- Included in this modified map are models that attribute dark matter to some form of hydrogen, including the model that dark matter is Hydrino. (Modified From Ref. 11-9)9

Mainstream #1: MOND, Models Denying the Existence of Dark Matter

The first family of mainstream physics/astronomy models to consider is Modified Gravity. These models, variations of Modified Newtonian Dynamics (MOND), initially proposed and developed by Mordechai Milgrom, Weizmann Institute, Israel, are an effort to attribute the lack of sufficient mass to hold galaxies together to a misunderstanding of gravity.10,11,12

The correction to gravity offered by the MOND theory is expressed mathematically as:

It is understood this correction only applies in the limit of very low accelerations (a ≪ a₀ ∼ 1.2 × 10⁻¹⁰ m/s²). Is this really low? Yes, the acceleration due to gravity on the surface of earth is more than 10 orders of magnitude greater. Reactions to this proposed solution, which does appear to roughly account for the gravitational anomalies arising from visible matter, rather than requiring the existence of some unobservable dark matter, were mixed.
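The numbers in this passage can be checked with a short sketch. One widely quoted statement of Milgrom’s proposal, which may differ in form from the expression intended above, is that in the low-acceleration limit the true acceleration is the geometric mean of the Newtonian acceleration and a₀. For a circular orbit this gives v⁴ = G·M·a₀, a rotation speed that does not depend on radius. The galaxy mass used below is an assumed, illustrative value.

```python
import math

G = 6.674e-11      # m^3 kg^-1 s^-2, gravitational constant
A0 = 1.2e-10       # m/s^2, MOND acceleration scale quoted in the text
G_EARTH = 9.81     # m/s^2, acceleration due to gravity at earth's surface
M_SUN = 1.989e30   # kg, one solar mass

def deep_mond_speed(mass_kg):
    """Radius-independent rotation speed in the low-acceleration limit: v^4 = G * M * a0."""
    return (G * mass_kg * A0) ** 0.25

# How many orders of magnitude separate earth's surface gravity from a0?
orders_of_magnitude = math.log10(G_EARTH / A0)

# 1e11 solar masses of visible matter is an assumed, illustrative galaxy mass.
v_flat = deep_mond_speed(1e11 * M_SUN)

print(f"g_earth / a0 spans about {orders_of_magnitude:.1f} orders of magnitude")
print(f"deep-MOND flat rotation speed ~ {v_flat / 1000.0:.0f} km/s")
```

The first line confirms the “more than 10 orders of magnitude” remark. The second shows why the proposal attracted attention: for a galaxy-sized visible mass, the flat speed it yields is of the same order as observed galactic rotation speeds.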

It was pointed out early on that MOND theory did not conform with energy conservation, momentum conservation, or angular momentum conservation. In the case of MOND, the divergence from classical theory was accepted as a major defect (unlike SQM, for which clear inconsistencies with classical physics are apparently regarded as positive features), hence subsequently efforts were made to make the theory consistent with classical physics.

Newer MOND variants appear to bring it into general agreement with classical conservation laws, but not necessarily with all of relativity, nor with some experimental observations such as gravitational lensing and observations of material distributions arising from colliding star clusters. For the latter, it is agreed the distribution is unexplainable without the presence of actual dark matter. Thus, the present experimental evidence, what little there is, indicates MOND, even with super complex mathematical variations, is not a viable theory.13

It should be noted that if MOND matched all observations, it would meet the criteria of a good model as it does not require any unknown material, involves a reasonable modification of classical physics, and is quantitative and testable.

Mainstream #2: Massive Astrophysical Compact Halo Objects (MACHO)

Another set of theories, ones that postulate dark matter is baryonic and consistent with the classical physics of gravity, holds that dark matter is actually “compact” objects, essentially small, dim stars, or perhaps black holes, that are not visible to radio or light telescopes. If dark matter were small dim stars in the outer reaches of galaxies, present in sufficient quantity to explain the observed flat rotation curves, there would be no need to postulate non-baryonic matter. Dark matter would be shown to be ordinary matter, hence the MACHO model would fit the requirements of a quality model outlined above.

In order to validate the MACHO model, an international group of physicists/astronomers studied millions of stars over multiple years. The result was again a demonstrable debunking of a set of models of dark matter. The study concluded, based on enough data to provide high quality statistical bounds, that the maximum amount of mass due to MACHOs in the halo of the Milky Way is very low.14

Specifically, they found only one clear example of a microlensing event in the 7 million stars studied, whereas if the halo of the Milky Way were composed completely of MACHOs, they would have observed 39 events. This led to the statistical conclusion that no more than 8% of the halo is composed of MACHOs (at most). The other 92+% of the matter in the Milky Way appears to be something other than stellar-like objects.
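The logic of turning a near-absence of events into an upper bound can be sketched with simple Poisson counting. This is a simplified illustration, not the collaboration’s analysis, which also accounts for detection efficiency and the assumed MACHO mass. The 95% confidence level and the function names are assumptions made for this example.

```python
import math

def poisson_cdf(n_observed, mean):
    """P(N <= n_observed) for a Poisson distribution with the given mean."""
    return sum(
        math.exp(-mean) * mean ** k / math.factorial(k)
        for k in range(n_observed + 1)
    )

def poisson_upper_limit(n_observed, confidence=0.95):
    """Largest Poisson mean still compatible with n_observed counts (bisection search)."""
    low, high = 0.0, 100.0
    for _ in range(200):
        mid = 0.5 * (low + high)
        if poisson_cdf(n_observed, mid) > 1.0 - confidence:
            low = mid    # this mean is still allowed, so search higher
        else:
            high = mid   # this mean is excluded, so search lower
    return 0.5 * (low + high)

EXPECTED_IF_ALL_MACHO = 39.0   # events expected for a 100% MACHO halo (from the text)

for n in (0, 1):
    limit = poisson_upper_limit(n)
    fraction = limit / EXPECTED_IF_ALL_MACHO
    print(f"{n} observed: mean < {limit:.2f} events, halo fraction < {fraction:.1%}")
```

With these simplifications the bound comes out at roughly 8% to 12% of the halo, depending on whether zero or one event is counted as signal. That is the same order as the figure quoted above.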

Mainstream #3: WIMP, Unknown Particle Models

As shown in Figure 11-3 there are a number of particles proposed as the identity of dark matter including bosons, neutrinos, and a previously unidentified form of matter called Weakly Interacting Massive Particles (WIMPs). There is no reason to review all the particle-identity options, as this has been done very well elsewhere, and the conclusion firmly reached is that only the WIMP model is potentially correct. All the others fail to explain many observations. Thus, only the WIMPs model, currently the model favored by most members of the astronomical community, is reviewed.

This is a good point in the narrative to reinforce a concept introduced in Chapter 2: science is not a democracy. A model is not validated by voting. Ideally, validation in physics requires a predictive mathematical model, followed by data that is sought and found to support it. The analysis below is intended to show the WIMP model is not validated, irrespective of its popularity.

Moreover, it is not clear that WIMP is a good scientific model according to the list of good model requirements given above. Indeed, it requires an entirely new, previously unidentified form of matter that interacts with standard baryonic matter through new physics laws that are neither predicted nor even quantitatively postulated by its advocates. In a sense, it is a transformation of one kind of unidentified nebulous matter into another type of nebulous, but more familiar, matter. That is, the model requires that the nebulous stuff be in the form of particles (single type), which makes it agreeably similar to all other mainstream matter, which is always “particle”… except for elementary particles in SQM, which are also not particles, but rather probability distributions (Ch. 4)?

The WIMP model is not only not mathematical or quantitative, but also “flexible.” It is so flexible it is difficult to debunk. WIMPs are simply defined as particles of non-baryonic matter, generally considered to be larger than a hydrogen atom in terms of mass/energy, of unknown and undefined nature. So, the WIMPs model is that there is a huge halo of large mass, non-baryonic particles around galaxies with the property of gravity, but otherwise extremely weakly interacting with other matter. Thus, the experimentalist is tasked with some remarkably vague instructions for finding WIMPs: some sort of big particles, with no prediction regarding how big; in fact, particles that may not even follow any known physics laws.

Flexible models of this type are hard to “kill off” as they are so vague that any negative test outcome can be shrugged off with the argument: “You didn’t look in the right place on the mass scale. And you didn’t look for the proper interactions that would make it visible.”

The response would be: “Where is the right place? How to make it interact and become visible?”

And this would be answered by: “It could be anything, and to find interactions might require a technology we do not have.”

The natural key question that arises based on the earlier discussion of science vs. metaphysics becomes: is the WIMPs model metaphysics? Difficult to answer absolutely, although this author leans strongly to identifying the WIMPs model as metaphysics, regarding true science as a narrow path.*

(*Author’s Note: The science-by-voting problem observed in the adherence to the WIMPs model has echoes in many “science”-based issues we confront repeatedly. A relevant example, but not the only one: Is “global climate change,” as opposed to “global warming,” science or metaphysics? After all, global climate change makes no quantifiable predictions. The model is vague/flexible (political?). No matter the outcome, adherents to the global climate change model claim “science” indicates human behavior caused it. The temperature of earth got hotter? Obviously human caused. Got colder? Humans! More storms? Human actions! Fewer storms? Human actions! No change? Just wait, it’s coming. In contrast, “global warming” is at least qualitatively predictive, hence a scientific hypothesis that is subject to testing. Lesson: beware fuzzy models.)

Herein it is impossible to review the full extent of efforts to find evidence of WIMPs as there are thousands of papers modelling WIMPs and many, many papers reporting on extremely complex experimental studies. The bottom-line-up-front (BLUF) of this brief review: No direct or indirect evidence of a non-standard model large particle (WIMPs) has been produced.

Below, we briefly review the two primary types of experiments and the null outcomes therefrom. The first type are those designed to look for collisions between weakly interacting WIMPs and ordinary matter. The second type looks for evidence of the outcome of annihilation reactions between two dark matter particles, which some models postulate can occur, leading to the production of known forms of matter such as neutrinos, high energy gamma rays, or possibly standard model particles such as cosmic ray antiprotons or even positrons.

WIMPs Model Direct Collision Testing

The experimental programs designed to observe direct collision between baryonic matter and dark matter all depend on some type of scintillator. What is a scintillation detector? It is a device that takes the energy from an incoming particle, and from it generates a light pulse that can be characterized and counted.

There are many customized scintillation devices, engineered such that each event associated with the given study is “countable.” For example, the size of the scintillation chamber must be optimized. If there is a strong interaction between the incoming particles and the scintillating material, the scintillating chamber is made small such that relatively few collisions can take place, thus reducing the likelihood of two light producing processes occurring in the same instant. It is not possible to properly count overlapping processes for many reasons. If the interaction is presumed to be very weak (e.g. WIMPs and ordinary baryonic material) the detector chamber should be made as large as possible, or counting will take too long, be statistically questionable, or it is even possible everyone initially involved will have passed on to a better place before there have been a sufficient number of events for statistical certainty.

A second issue, particularly germane to weak interactions, is distinguishing noise from signal. Generally, scintillation detectors will scintillate because of all sorts of particles entering the chamber. It is often impossible to distinguish which type of reaction created the light pulse. If it can be established, for example by control studies, that the signal is thousands of times more likely to arise from the desired reaction than from “noise” reactions, the noise problem can be ignored. If the number of “noise” reactions is of the same order, or even greater in magnitude than the target reaction: problem!
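The two constraints just described, detector size versus counting time and signal versus noise, can be put into rough numbers. Every rate and mass in the sketch below is a hypothetical placeholder chosen only to show the scaling; none is a measured or predicted WIMP value. The signal-over-square-root-of-background figure is a common rule of thumb, not the method of any particular experiment.

```python
import math

def expected_counts(rate_per_kg_day, mass_kg, days):
    """Expected number of events for a given rate, detector mass, and run time."""
    return rate_per_kg_day * mass_kg * days

def days_needed(target_counts, rate_per_kg_day, mass_kg):
    """Run time required to accumulate target_counts events on average."""
    return target_counts / (rate_per_kg_day * mass_kg)

def naive_significance(signal_counts, background_counts):
    """Rule-of-thumb signal-to-noise: signal over the Poisson fluctuation of background."""
    return signal_counts / math.sqrt(background_counts)

# All numbers below are hypothetical placeholders, not measured values.
SIGNAL_RATE = 1e-5        # signal events per kg per day
BACKGROUND_RATE = 1e-3    # background events per kg per day after shielding

for mass_kg in (10.0, 300.0):
    days = days_needed(10, SIGNAL_RATE, mass_kg)
    signal = expected_counts(SIGNAL_RATE, mass_kg, days)
    background = expected_counts(BACKGROUND_RATE, mass_kg, days)
    print(f"{mass_kg:6.0f} kg: {days:8.0f} days for 10 events, "
          f"S/sqrt(B) = {naive_significance(signal, background):.2f}")
```

A larger detector shortens the wait in direct proportion to its mass. It does nothing for the signal-to-noise figure, though, as long as the background rate per kilogram is unchanged, which is why shielding matters as much as size.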

Thus, to study “weak interactions,” such as those postulated to occur between ordinary matter and WIMPs, it is necessary to block/shield noise sources, which include the decay of nearby radioactive particles, gamma rays from the sun, neutrinos, etc. In practice, for WIMPs detection, only after these false positive producers are thoroughly screened is it possible to observe scintillation from the desired process in a statistically meaningful fashion.

WIMPs experiments are large and expensive because of both the need for superb screening and the need for very large, special detectors, preferably held at cryogenic temperatures to eliminate even thermal noise. Indeed, WIMPs experiments are generally organized to be at the deepest part of retired mines as this puts them in a place where solar radiation of any type cannot “trip the switch,” hence eliminating a significant source of noise. This means building a large detector, composed of exotic, hyper-purified, and invariably expensive materials, along with a cryogenic system, deep in the bowels of the earth. Other noise, such as that created by neutrinos, which can traverse virtually any material, or by local radiation sources, is probably impossible to fully screen, but means to identify pulses associated with these events (e.g. by excluding light signals of the wrong magnitude or of obviously wrong duration) can be devised.

There are many examples of WIMPs experiments placed in deep mines to minimize noise signals. Notably, after many years, and many experiments, none of these multi-million dollar efforts provided evidence of dark matter/baryonic matter collisions. One example is the Sanford Underground Research Facility located in the Homestake Mine in South Dakota, in which scientific experiments, including dark matter detection, are conducted nearly one mile underground. The facility is large, deep, and cold: they constructed the LUX dark matter detector and filled it with a third-of-a-ton of cooled super-dense liquid xenon. The xenon is surrounded by powerful sensors designed to detect the tiny flash of light and electrical charge emitted if a WIMP collides with a xenon atom within the tank. The detector’s location beneath nearly a mile of rock, and inside a 72,000-gallon, high-purity water tank, helps shield it from cosmic rays and other radiation that would interfere with a dark matter signal.15

Other deep mine results can be found by searching these studies: Cryogenic Dark Matter Search (CDMS), the Expérience pour DEtecter Les WIMPs En Site Souterrain (EDELWEISS), and the Cryogenic Rare Event Search with Superconducting Thermometers (CRESST) Collaborations.

Are there any significant results? To quote Bertone’s excellent review:

And although results from the CoGeNT, CRESST, and CDMS experiments were briefly interpreted as possible dark matter signals, they now appear to be the consequences of poorly understood backgrounds and/or statistical fluctuations.

In plain English: No signal detected.

But, Bertone, presumably speaking for the community, claims success!

As CDMS, EDELWEISS, XENON100, LUX, and other direct detection experiments have increased in sensitivity over the past decades, they have tested and ruled out an impressive range of particle dark matter models.

In plain English: Lots of sound and fury, lots of fantasy models tested, much money and talent employed, signifying nothing.*

(*Author’s Note: This author recalls one of the “Jason Bourne” movies in which the bureaucrats from a three letter agency who led the obviously failed “Treadstone” project testify to a committee of the US Congress that the proper response to failure is to spend even more money on a more advanced version, “Blackbriar.” Similarly, any failed large, expensive, experimental physics program is used as a justification for an even larger, even more expensive, program, purportedly intended to improve and succeed, but generally destined to failure as well... see fusion energy.)

The above analysis is clearly from a skeptic. What is the alternative perspective? What is it exactly that the standard physics community players claim is a success? The most significant result of these studies is a determination of the maximum possible collision cross-section between dark matter and normal matter. In other words, a null result implies a maximum cross section. The logic: if the cross section were this size or larger, something would have been detected. Nothing detected, hence maximum cross section determined. A lot of assumptions and a lot of interpretations of limited data are required to make these “quantifiable” determinations.
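The logic of turning a null result into a maximum cross section can be sketched as follows. This is a minimal illustration, not the analysis used by any of the collaborations named above: it assumes a background-free counting experiment with Poisson statistics, and the flux, target count, and live time are hypothetical placeholders.

```python
import math


def poisson_upper_limit_zero_events(confidence_level: float = 0.90) -> float:
    """Largest mean event count still compatible with observing nothing.

    With zero background, P(0 events | mu) = exp(-mu). The upper limit is
    the mu at which that probability drops to 1 - confidence_level.
    """
    return -math.log(1.0 - confidence_level)


def max_cross_section_m2(
    flux_per_m2_s: float,
    n_targets: float,
    live_time_s: float,
    confidence_level: float = 0.90,
) -> float:
    """Upper limit on cross section from a null result.

    Expected events = flux * cross_section * n_targets * live_time,
    so the limit is mu_up divided by the total exposure.
    """
    mu_up = poisson_upper_limit_zero_events(confidence_level)
    return mu_up / (flux_per_m2_s * n_targets * live_time_s)


if __name__ == "__main__":
    # All three inputs are hypothetical, for illustration only.
    limit = max_cross_section_m2(
        flux_per_m2_s=1.0e9, n_targets=1.0e27, live_time_s=3.0e7
    )
    print("maximum cross section (m^2):", limit)
```

The sketch makes the dependence on assumptions explicit: the limit scales inversely with the assumed flux, so any uncertainty in the presumed dark matter density or velocity carries directly into the quoted maximum cross section.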

How small are the current maximum cross sections determined from “failure to observe” interpreted as success? The typical nuclear particle cross section is about 10⁻²⁸ m², on the order of 20 orders of magnitude larger than the maximum permitted cross section for collisions between dark matter and baryonic matter based on the most highly sophisticated experimental failures to detect dark matter conducted to date.

What exactly does twenty orders of magnitude imply? Analogy: if the earth is used to represent a magnitude cross section of one, then an object with a diameter of ~1mm would have a cross section 20 orders of magnitude smaller. The “W” in WIMP is for very, very, very, very, very, very, very weak.
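The analogy can be checked with a few lines of arithmetic. The sketch below treats both objects as circular disks seen face-on and uses a mean Earth diameter of about 12,742 km; the function name is illustrative.

```python
import math

EARTH_DIAMETER_M = 1.2742e7   # mean Earth diameter, about 12,742 km
SMALL_OBJECT_DIAMETER_M = 1.0e-3  # the ~1 mm object from the analogy


def disk_area_m2(diameter_m: float) -> float:
    """Geometric cross section of a sphere seen face-on."""
    return math.pi * (diameter_m / 2.0) ** 2


ratio = disk_area_m2(EARTH_DIAMETER_M) / disk_area_m2(SMALL_OBJECT_DIAMETER_M)
orders_of_magnitude = math.log10(ratio)

if __name__ == "__main__":
    print("area ratio:", ratio)
    print("orders of magnitude:", orders_of_magnitude)
```

Because area scales with the square of the diameter, a diameter ratio of roughly ten orders of magnitude yields a cross-section ratio of roughly twenty.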

Figure 11-4: Failure to Observe Dark Matter Collisions with Baryonic Matter- In this figure the failure to observe any collisions between dark matter and ordinary baryonic matter is shown to set an upper limit on the cross-section for dark matter. At present that upper limit is ~10²⁰ times smaller than the cross section of a hydrogen nucleus/proton. The shapes correspond to the detector employed: cryogenic solid state (blue circles), crystal (purple squares), liquid argon (brown diamonds), liquid xenon (green triangles), and threshold (inverted orange triangle). (After Ref 11-16)16

Annihilation Studies

As noted earlier, there is a hypothesis that dark matter WIMP particles can annihilate each other and, in the process, produce particles of known form. Properly designed and conducted experiments looking for these particles should reveal evidence of these proposed annihilation processes, hence provide indirect evidence of the existence of WIMPS.

The first difficulty with this model of dark matter behavior is what might best be called the “Mr. Spock” problem. To wit: in many “science included” movies/programs, for example Star Trek, there is often a need for some novel scientific sounding postulate to enable the drama to proceed. In Star Trek, the repeated plot device is for Mr. Spock to deliver an absurd physics hypothesis. Spock delivering these absurdities doesn’t matter. It’s only a plot device, and it’s fun. The problem is Spock-type reasoning sometimes, and perhaps devastatingly (is there any rational basis for Gain of Function research involving human pathogens?), leaks into real life. The notion of WIMPs colliding to create some form of baryonic matter is Spock-like science.

Indeed, there is no experimental evidence that non-baryonic matter is particulate in nature, and less than zero evidence that these hypothetical particles interact, let alone annihilate, and absolutely no reason to suspect when they do theoretically annihilate that normal matter is the product. This whole line of reasoning is a mystery wrapped in an enigma fantasy.

This author is not the only physicist to recognize the “Spockian” feature of these models. To quote Bertone et al. (Ref. 11-4):

In the 14 February 1978 issue of Physical Review Letters, there appeared two articles that discussed, for the first time, the possibility that the annihilations of pairs of dark matter particles might produce an observable flux of gamma rays. And although each of these papers [by Jim Gunn, Ben Lee,17 Ian Lerche, David Schramm, and Gary Steigman (Gunn et al., 1978), and by Floyd Stecker (Stecker, 1978)] focused on dark matter in the form of a heavy stable lepton (i.e., a heavy neutrino), similar calculations would later be applied to a wide range of dark matter candidates. On that day, many hopeless romantics became destined to a lifetime of searching for signals of dark matter in the gamma-ray sky.

Perhaps an even greater difficulty in this model is that the experimental approach is invariably fundamentally flawed. As pointed out elsewhere (Bertone), the detection of any of the purported forms of baryonic matter derived from annihilation of dark matter can always be explained by many ordinary baryonic material processes. In other words, if there were a signal from the proposed process, it would be lost in the noise.

In sum, searching for evidence of dark matter annihilation is a Sisyphean task best taken up by hopeless romantics.

Non-Mainstream Models/Hydrogen Models

No dark-matter-is-a-form-of-hydrogen conjecture ever seems to make the top ten hit list, or any list, for the mainstream/legacy astronomy community. Always stamped Cancelled by the cognoscenti.

The notion that dark matter is simply hydrogen does not clear the hurdle into the allowed/not-cancelled, motley collection of debunked/metaphysics models for dark matter heralded by the mainstream physics community. Hence, for this manuscript the depicted family of models (Fig 11-3) had to be modified to include the family of hydrogen models.

Perhaps being cancelled is a good thing for the hydrogen models. From a rational perspective being associated with any of the accepted models is like being too closely affiliated with cold fusion. Cold fusion and acceptable dark matter models share features, such as occasional whiffs of evanescent and unrepeatable data in support of the models, lack of mathematical expression, no quantitative aspects, hand-waving explanations, and endorsement by hopeless romantics. In contrast, it is shown below that one of the hydrogen models, the GUTCP model, makes quantitative and precise predictions regarding observables based on classical physics that arise from the production of Hydrinos/dark matter. And, lo and behold, these features are observed.

There are three dark-matter-is-hydrogen models. The first is that dark matter is simply ordinary hydrogen, the second is that there are two types of hydrogen atoms, one “normal” and the other dark, and the third is the GUTCP model, in which Hydrinos are dark matter. This third model is based on simple classical physics postulates and is fully quantitative. It postulates that processes are always taking place in stars and the intergalactic medium (IGM) that result in normal hydrogen converting to Hydrinos, resulting in the generation of light of specific wavelengths. Lo and behold, light of the predicted frequencies, with the predicted wave shapes, is observed! Still, the mainstream physics community refuses to acknowledge even the existence of this correlation.

Hydrogen Model #1: Ordinary Hydrogen

The ordinary hydrogen-is-dark-matter hypothesis is the oldest hydrogen model and was first presented in the 1950s. After many studies and computations, there is no change from the original consensus: there is not enough standard hydrogen to account for the observed gravitational effects.17 As hydrogen spectral lines can be measured, it is reasonable to make approximations regarding the amount of atomic and molecular hydrogen in various forms in interstellar space.

Some work also was done to look at hot hydrogen, that is, ionized hydrogen/protons.18 The existence of free protons was rejected as implausible. The model requires a very high temperature (see Ch. 9) to maintain hydrogen in an ionized state. It was considered implausible to suggest such high temperatures existed in deep, cold space. (In those days plausibility was an actual argument.) To date, those observations suggest the free hydrogen mass, all forms, is no more than 10% that of the material in the visible stars. This is clearly not sufficient to account for the gravitational effects of dark matter. Proper physics here: a reasonable theory was presented, data to test the theory collected, and the theory declared untenable because data did not support the hypothesis.

One argument that could be employed against the hydrogen-is-dark-matter hypothesis, as well as the other two hydrogen hypotheses, is that hydrogen will trigger the scintillation counters buried deep in the caves, just as WIMPS should. That is, those counters might be devised to detect WIMPS, but why not hydrogen?

There are several reasons those counters will not detect any form of hydrogen. First, hydrogen has a very large cross-section for interaction with normal matter. If dark matter is a form of hydrogen, burying the counters deep in the earth will guarantee no dark matter will reach them from the production sources somewhere off planet. Any form of hydrogen/dark matter reaching the earth from cosmic sources will react with the baryonic material composing the atmosphere or the solid earth long before reaching the deeply buried detectors. Second, the vessels containing the detectors are designed to prevent any material from the natural environment, including hydrogen, from leaking in. Third, none of the hydrogen models require the hydrogen to be at all hot. (Even if the hydrogen arrived “hot” to the earth’s atmosphere, it would be thermally equilibrated long before it reached a detector deep in a mine.) WIMPs in contrast are by theory so extremely weakly interacting they should reach the detectors without interaction, hence will maintain constant thermal energy. Cold hydrogen dark matter will not have sufficient momentum to trigger the detectors, and in any event will be indistinguishable from hydrogen generated by normal chemical processes. Fourth, the detectors, because of the momentum issue just noted, are not able to detect matter with less than many GeV of energy/mass. Hydrogen is under one GeV, hence the detectors are not sufficiently sensitive to observe hydrogen.

Hydrogen Model #2: Two Forms of Hydrogen

A novel solution to the relativistic form of Schrödinger’s Equation, the Dirac equation, was recently proposed for electrons in any s-orbital, irrespective of the principal quantum number n.19 According to this solution there are in fact two forms of hydrogen, one the “normal type” which chemically reacts in the standard manner, and a second type, dubbed alternative kind of hydrogen atoms (AKHA), which is largely unreactive, and is postulated to be the identity of dark matter.20

It must be noted that the model is distinctly different from that of the GUTCP in several significant ways. First, unlike the GUTCP, it does not postulate the existence of lower energy forms of hydrogen. In fact, the model indicates that the AKHA energy states, ground state and excited states, are fully degenerate, that is, have the same quantized energy levels as their matching states for normal hydrogen. Thus, for example, the true ground state, the minimum energy level, for a hydrogen atom in both the normal and AKHA forms is -13.6 eV.
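For reference, the quantized levels in question follow the textbook formula E_n = -13.6 eV / n². The sketch below tabulates the first few; under the degeneracy claim of the AKHA model as described here, the same numbers would apply to both forms of hydrogen. The constant and function name are illustrative, and the formula is the standard non-relativistic one, not the Dirac-equation solution cited in the text.

```python
RYDBERG_ENERGY_EV = 13.6057  # hydrogen ground-state binding energy, in eV


def hydrogen_level_ev(n: int) -> float:
    """Energy of the level with principal quantum number n (n = 1, 2, 3, ...)."""
    if n < 1:
        raise ValueError("principal quantum number must be a positive integer")
    return -RYDBERG_ENERGY_EV / n**2


if __name__ == "__main__":
    for n in range(1, 5):
        print(f"n = {n}: {hydrogen_level_ev(n):.4f} eV")
```

Note that the formula admits no level below n = 1, which is the point of contrast with the GUTCP drawn above.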

The theory, like many quantum theories, requires novel aspects to satisfy the theoretical mathematical regulations of quantum mechanics. The most intriguing is the need for an additional quantum number in order to allow for the proposed energy degeneracy of regular and AKHA hydrogen. This can be understood by reference to the extra quantum number required by the so-called PEP, discussed in detail earlier (Chs. 4, 5, and 6). For example, the ground state electrons in helium have the same energy according to SQM, and this requires the existence of a quantum number for spin, an extra quantum number required such that two electrons can “legally,” that is, with distinct sets of quantum numbers, have the same energy. That is, the relevant mathematical regulation of SQM for multielectron systems is that no two electrons can have precisely the same quantum numbers. Of course, this directly contradicts another requirement of SQM: electrons are indistinguishable (Ch. 4). Nothing to see here, move on.

Different spin values for electrons, hence different quantum numbers, permit multiple occupancy of a particular orbital in SQM. For example, the two electrons in ground state helium are postulated to have the same energy, and same wavefunction/probability distribution, but as they have different spin quantum numbers, this is allowed. Degeneracy is permitted as the different spin values satisfy the mathematical regulations regarding degenerate states.

In a similar fashion the author of the AKHA theory believes it is necessary to postulate the existence of yet another form of spin, isohyspin, to mathematically permit the existence of degenerate states in hydrogen atoms, and thus allow for the existence of AKHA states. As there never are two electrons in a one electron hydrogen atom, why be concerned with a degeneracy issue? The spin postulate does explain a requirement of the dark matter hypothesis of this AKHA model. If there is a conserved quantum number, isohyspin, the two types of hydrogen cannot rapidly shift from one type to the other. The argument appears to be that once hydrogen was born at the Big Bang as one type or the other, it was “stuck” in that form. In other words, once a hydrogen atom is born as one spin form, AKHA physics requires it to stay in that form forever, because this novel physics requires the isohyspin number of an atom to be conserved. Thus, the “dark” form of hydrogen is dark forever.

The author of the theory suggests that the alternate hydrogen would not couple to gravity or to electromagnetic forces. In other words, it would have all the required “non-interaction with other stuff” properties required of dark matter:

Additionally, there seems no ground to expect that the isohyspin would couple to the gravitational force/interaction. As for the electromagnetic force/interaction, the (ordinary) spin couples to the magnetic field, but the isospin does not couple to the electromagnetic force/interaction. Therefore, there seems to be no reason for the isohyspin to couple to the electromagnetic force/interaction either.

Note: the theory of alternative hydrogen is NOT popular in the physics community, virtually all citations being “self-citations.”21 Is this meaningful?

In sum, the AKHA model is intriguing as it purports to identify dark matter as a form of the most abundant material in the universe: hydrogen. It also purports to employ standard quantum, albeit the Dirac relativistic equation, to predict the existence of alternative states of hydrogen. Moreover, it even provides a semi-quantitative explanation regarding why this form of hydrogen would be “dark,” that is, non-interactive. According to the author of this monograph, this model should be taken seriously because it does align with many of the requirements of a reasonable physics model as outlined earlier in this chapter. Also, it does have an underlying quantum theory making it part of a larger version of all of physics.

That said, there are issues with the AKHA model. First, the math is too obscure for the non-specialist, including this author. However, this author can apply the same general criticisms of SQM, addressed in detail in an earlier chapter, to this model. Indeed, it has all the same short-comings: the net outcome of the math is a probability distribution/wave function which is physically incomprehensible multi-dimensional gibberish in a multi-electron system, requires the electron to be an infinitely small particle, requires approximations to work around infinities for distribution within the nucleus, etc. Second, unlike the GUTCP it does not provide any predictions regarding multi-electron systems. It does not overturn the PEP, it does not predict ionization energies of any multi-electron systems, it does not predict Balmer line broadening in stars, or excess heat in some plasmas, etc.

Finally, it is again not clear if it is physics or meta-physics. The author of the AKHA model does not provide any clear experimental approach to demonstrating the existence of the alternative hydrogen. It is only claimed: i) the model is consistent with AKHA being dark matter and ii) the model could explain another classic problem in physics: the experimental high-energy tail of the linear momentum distribution in the ground state of hydrogenic atoms. But nowhere is there an indication of how one should collect additional supporting data, as it does not predict any observation to look for, etc.

The reaction of the physics community to the AKHA model is yet another example of the mainstream physics community reaction to anything outside the standard paradigm, or not promoted in certain circles: ignore/cancel. Certainly, the suggestion of an alternative form of hydrogen, one consistent more or less with the Dirac equation, is closer to being an acceptable scientific model than the existence of Unobtanium, aka WIMPs, yet only the latter gets any attention, and literally millions of dollars in support annually.

Hydrogen Model #3 and Winner!: Dark Matter is Hydrino (DM/Hy)

The final hydrogen family model is that dark matter is Hydrino material. This author asserts the GUTCP model is definitely not debunked, hence remains a valid theory.

The main criterion for rejection of a model is failure to be consistent with observation. Any model that is consistent with all observations remains valid. Not only is the DM/Hy model consistent with all observations, but it also predicts specific spectral features, and subsequent observations are consistent, precisely, with the predictions. Moreover, the predictions require in all cases only three dimensions, no non-baryonic matter, no modifications to the Law of Gravity, and only classical physics equations, pre-1872. As always in the case of science, the model is not proved, hence the objective here is simply to demonstrate the model remains valid.

Let us review some earlier material in this book relevant to the proposition that the DM/Hy hypothesis remains valid. First, as discussed in the Hydrino Hypothesis chapter, the GUTCP predicts that during the two-step process for creating Hydrinos, certain wavelengths of light in the EUV part of the EM spectrum are produced. Years after this prediction, data was collected that was quantitatively consistent with these predictions both in terms of the frequency of the light and the Bremsstrahlung-type peak shapes.

Moreover, the lines are produced in an environment (interstellar medium, ISM) containing a high concentration of helium, one of the GUTCP-identified catalysts for the Hydrino production process. Alternative theories for explaining these lines require the ISM to have considerable concentrations of iron, oxygen, and other heavy species. As discussed in detail in Chapter 8, those models also require the very thin ISM gas to be extremely hot, up to millions of degrees C, and to hold these super high temperatures for many, many (Thousands? Millions?) years without providing a mechanism to produce the energy, or a mechanism to explain why hot, very thin, gas will not nearly instantly radiate away its heat to the extremely cool background of space. The plausibility argument for rejecting a model seems to have been abandoned.

Second, consistent with observations made in the laboratory regarding extreme Balmer series line broadening, similar broadening has repeatedly been observed in stars (Chapter 8). This broadening indicates the creation of atomic hydrogen with spectacular energy. Once again, as per the GUTCP theory, in both cases the broadening occurs only in plasmas with high concentration of predicted catalyst species.

Third, for complex electrodynamical reasons, Hydrino is predicted to not interact with light as ordinary matter does. Stated simply, it is predicted to be “dark.” The dedicated reader is encouraged to peruse the Hydrino chapter of the Source Text for a full explanation.

Before proceeding with additional arguments regarding the agreement between DM/Hy and observations, it is important to note that these arguments are not intended to prove the model is correct. The arguments made are only intended to show the theory has not been debunked. This has a bearing on counter arguments of this variety: “the physics community has an alternative explanation for observed line broadening in stars. Specifically, it is due to extremely high ion concentrations in these stars leading to Stark broadening.”

This author’s analysis is that attributing line broadening to high concentrations of charged particles/Stark broadening is a specious argument because there is no direct measure of ion concentration in stars and the estimated ion densities are many orders of magnitude higher than ever achieved in terrestrial plasmas. In fact, generally back-think is used: “we observe this line broadening, thus the ion density in stars must be this to reach agreement with Stark theory.” That is, it is assumed, without mentioning the assumption, that all observed broadening is due to the Stark effect. In any event, the required super high ion densities are surmised from the existence of line broadening. The argument is clearly a bit circular.

In fact, there is no need to argue which is the superior model. The primary point in this monograph is that alternative explanations for a phenomenon cited here may exist, without those arguments undercutting the DM/Hy hypothesis. Two arguments can be consistent with an observation. Both are valid until further testing and data demonstrate one of them is clearly false. Neither the physics community nor this author can dismiss arguments just because they are not favored. Science is not a democracy, nor personal, nor about experts.

The next argument regards various heat effects. As discussed in Chapter 10, observed excess heat in plasmas is consistent with the general Hydrino Hypothesis. The formation of Hydrinos results in the release of energy. Very quickly, in most environments, that energy converts to heat. (This author presented seminars in the first decade of the present century regarding the GUTCP in a number of places entitled, “Burning Water,” as the excess heat observed in water plasmas is postulated to result from a form of “combustion” in which hydrogen atoms in the water molecules are effectively “burned” to create a Hydrino ash.)

Of particular significance is the postulate that Hydrino formation, and the resultant heat production, can explain not only dark matter, but also a second great mystery of cosmology: why is the corona of a star orders of magnitude hotter than the surface of the star? The surface of the sun is of the order 6000 K, and the corona is variously measured to be 200 to 500 times hotter. A reasonable average indicates the corona is well above 1 million degrees K.
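The temperature range implied by these figures follows from simple multiplication. The sketch below uses only the numbers quoted above (a surface of order 6000 K and a corona 200 to 500 times hotter); the names are illustrative.

```python
SURFACE_TEMPERATURE_K = 6000.0        # order-of-magnitude solar surface value
CORONA_FACTOR_RANGE = (200.0, 500.0)  # "200 to 500 times hotter"


def corona_temperature_range_k(surface_k: float, factors: tuple) -> tuple:
    """Return the (low, high) corona temperatures implied by the factor range."""
    low_factor, high_factor = factors
    return surface_k * low_factor, surface_k * high_factor


low_k, high_k = corona_temperature_range_k(SURFACE_TEMPERATURE_K, CORONA_FACTOR_RANGE)

if __name__ == "__main__":
    print(f"implied corona temperature: {low_k:.2e} K to {high_k:.2e} K")
```

Both ends of the implied range exceed one million kelvin, which is the sense in which the corona is "well above" that figure.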

One of the underlying issues is the need for consistency with the second law of thermodynamics. According to this law entropy is always net generated in any thermal interaction between two bodies. This in turn requires that heat always travels from hot bodies to cold bodies. It is a requirement of thermodynamic theory consistent with common experience. Hold ice; note heat travels from the hand to the ice, accelerating melting, and cooling the hand, as the two bodies move toward thermal equilibrium. Or note heat from a camp fire warms the observer, and not vice-versa.

Apparently, the corona/solar surface duo is an exception to a thermodynamic law which has never previously been challenged by data. Somehow the relatively cold surface of the sun heats the much, much, much hotter corona, a lot. This can be stated in many ways, for example:

Since there is no plausible source of energy further out in the corona to heat up the corona, we must assume that some form of energy other than heat is supplied from below the corona to realize its million-degree temperature. This is the coronal heating problem discussed in the literature.22

Virtually all of the standard explanations involve the sun depositing magnetic energy into the corona:

The energy source of flares is believed to be the magnetic field near sunspots; The stressed magnetic field with field lines directed in the opposite directions coming closer will release magnetic energy explosively. This process called magnetic reconnection will not only heat the plasma, but also eject plasma clouds to the interplanetary space. (Ref. 11-22)

There is a fundamental issue with all the versions of models relying on the deposition of energy due to magnetic collapse/reconnection: experience and logic indicate that magnetic fields can collapse only upon the addition of energy. Indeed, it has long been known that to demagnetize a magnet requires heating it above its Curie point. Once this is done, the field disappears, but no heat is released. This is an example of a common observation: it takes added heat to make a magnetic field disappear.

Another example: to cool objects below the temperature of liquid helium, scientists place magnetic materials in a very cool compartment on top of electromagnets which are at room temperature. On top of the magnetic materials a sample is placed. The magnetic material, not the whole material, just the magnetic sub-system within it, can be said to have a temperature of zero (or arguably negative) because the field of the electromagnets is strong enough to prevent any motion of the magnetic moments in the magnets. All micro-magnetic fields align perfectly, without possibility of motion, with the applied macro field.

Yes, one can have two sub-systems in the same solid form at different effective temperatures! In this case the magnetic sub-system of the magnet can be absolute zero, while the rest of the magnetic material is at liquid helium temperature (~ 4.2 K).

Next step in the cooling of the sample requires turning off the electromagnets. After removing the electromagnets holding the magnetic system at zero K, heat will flow into the magnetic sub-system from the rest of the magnetic material and from the sample in thermal contact. The magnetic system “heats,” measurably decreasing the magnetic field strength, and simultaneously the rest of the magnetic material, and the sample, cools. The sample temperature, and the magnet material temperature equilibrate somewhere between liquid helium at 4.2 K and 0 K. The flow of heat into the magnetic sub-system is consistent with the second law of thermodynamics: heat flows from the “hot” material to the “cold” magnetic system. Once again, to reduce/collapse a magnetic field, energy must be added. The magnetic sub-system heats via energy transfer from the magnetic material and the sample.

A little consideration informs that there is in fact no loss of magnetic energy when the field collapses. Magnetic fields are the sum of the magnetic moments of individual atoms. During demagnetization these atoms do not lose their fields. All that happens is that the fields of the atoms no longer align, hence no longer create a net field. All the fields are now pointed in random directions, but each atom retains its magnetic field.

Figure 11-5: MES Cell- One key feature, as described in the text, was the magnetic heater which comprised a bi-directional wiring. The heating wire was doubled up, then wrapped around the center cylinder as shown. This eliminated the creation of a net magnetic field and concomitantly a noise motor drive signal. A- Thermocouple feed-thru, B- Ground glass joint for attaching carbonyl transfer bulb, C- Liquid nitrogen reservoir, D- Sample, E- Beaded Heater, F- Conflat Flange, G- Vacuum line, H- 131 Kapton windows, squeezed and heat sealed, I– Heater wires. Nothing happened to the magnetic moments created by the currents, still present. The fields created by opposite direction currents simply cancel. There was no loss of “magnetic energy” after the wire was re-wrapped. (After Ref. 11-23)23

The point that each atom retains its fields is reflected in demonstrated macroscopic examples with fields from currents in wires. A good example is drawn from the author’s own experience. One of the first engineering problems this author had to solve as a novice graduate student was to design a cell that permitted heating a sample for a Mössbauer Spectroscopy system to a high temperature (>400 C) in a vacuum, or under controlled gas flow. The cell had to have a short path (ca. <10 cm) between radioactive source and scintillation counter, allowing maximum solid angle between source and detector, thus maximizing signal, and had to be designed such that the only solid material intersected by the gamma particles in their path to the detector was the sample (Figure 11-5). Indeed, the only signal was to be absorption of select energies by the sample, hence any material other than the sample in the path created noise. The cell design allowed the sample to be heated electrically by passing current through a high current, high temperature wire wrapped around the sample cylinder.

On the inaugural trial a problem arose. It became clear that the current passing through the heating wire created a magnetic field that dramatically impacted the operation of the magnet-driven motor, less than 5 cm away, carrying the radioactive source. The result was very erratic motor behavior and the production of only a very noisy signal. Inspired solution: take the heater out, “double up” the wire, creating a heating wire consisting of two parallel wires, each with current going in the opposite direction, then re-wrap. Now, the heating current would make two magnets, one creating a North end near the motor, and one creating a South end near the motor. The fields should cancel. Solution successful! Motor worked, noise eliminated, indicating the hypothesis of cancelled fields was correct.
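
The size of the cancellation can be illustrated with a rough calculation. The sketch below is not a model of the actual cell: it replaces the wrapped heater with two infinitely long, straight, parallel wires, and the current (5 A) and wire separation (1 mm) are assumed values chosen only for illustration. The 5 cm distance is the upper bound quoted above. Under those assumptions it compares the field of a single wire with the net field of the doubled-up pair at the location of the motor.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability, T*m/A


def field_long_wire(current_a, distance_m):
    """Field magnitude (tesla) at a perpendicular distance from a long straight wire."""
    return MU_0 * current_a / (2 * math.pi * distance_m)


def net_field_doubled_wire(current_a, distance_m, separation_m):
    """Net field magnitude (tesla) of two parallel wires carrying opposite currents.

    The observation point is taken to lie in the plane of the two wires,
    so the two individual fields are collinear and simply subtract.
    """
    near = field_long_wire(current_a, distance_m)
    far = field_long_wire(current_a, distance_m + separation_m)
    return near - far


# Illustrative values only. The current and separation are assumptions;
# the distance is the "less than 5 cm" bound mentioned in the text.
current = 5.0       # A (assumed)
distance = 0.05     # m
separation = 0.001  # m (assumed)

single = field_long_wire(current, distance)
doubled = net_field_doubled_wire(current, distance, separation)

print(f"single wire:      {single:.3e} T")
print(f"doubled-up wire:  {doubled:.3e} T")
print(f"reduction factor: {single / doubled:.0f}")
```

In this simplified geometry the reduction factor equals (distance + separation) / separation, so the closer together the two conductors sit, the more complete the cancellation at the motor.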

Did the doubling up, which produced equal and opposite currents, lead to magnetic collapse? Was there a reduction in the field energy? No and no. Each tiny element of the wire with current passing through produced the same field as before, but once the wire was doubled up the net vector field at each point in space was near zero. Fields still there, but net field zero. (For my tens of fans, I am announcing: the proper treatment of infinite fields, the significance of net field and field cancelling effects, will be the theme of my next book.)

In sum, given a rational analysis of the implausible suggestion that collapsing magnetic fields, or some sort of “energy bomb delivered by fields,” heats the corona to a temperature more than 200 times that of the solar surface, perhaps it is reasonable to conclude that at the moment there is no plausible model for the surface of the sun heating the corona and violating the second law of thermodynamics.

The GUTCP provides an alternative model, one consistent with all the laws of thermodynamics: Hydrinos are forming in the corona, and this process leads to coronal heating. Indeed, the corona in this model transfers heat to the solar surface, in conformity with the second law of thermodynamics. That is, dark matter is generated in the corona of stars where there is both fuel (atomic hydrogen) and catalyst (helium) in high concentrations, releasing tremendous heat energy. In sum, the corona generates Hydrinos, and that is the energy source for the >1 million K temperature.

Hydrino formation in the corona might explain another mystery: why isn’t neutrino production, per the currently accepted paradigm, enough to explain the total radiative energy from the sun?24

It has been suggested that the discovery of at least three neutrino types, which can and will change flavor during their trip from the sun’s core to the earth, has resolved the problem of the disconnect between the sun’s total power output and the number of neutrinos observed in deep mine experiments.25 The literature is a bit confusing. Correction after correction after correction, complications galore.

But could it be that the extremely hot solar corona is responsible for a significant fraction of the sun’s radiated energy? If yes, then Hydrino production is a significant barrier to global cooling…

What of the gravitational impact of dark matter/Hydrino? Are Hydrino production rates high enough for most of the matter in galaxies to be Hydrino? Or, is it possible that the early universe was initially primarily Hydrino? Given the stability of Hydrino, might it be possible very little of the Hydrino initially present in the universe ever converted to something else? For the present, this author will leave those questions to be considered by others…

Concluding Remarks

All tests agree: there is nothing in the broad sweep of astrophysical data that is contrary to the hypothesis that dark matter is Hydrino. It remains a valid theory, arguably the only valid theory left of the field of dead or dying dark matter candidates. It is one of the few theories that is consistent at all sizes and all systems. For example, unlike the WIMP model and other models, the GUTCP DM/Hy model is a natural outcome of an overall theory. GUTCP physics connects remarkably well at all scales. Furthermore, the Hydrino hypothesis can be (and has been) easily tested in myriad ways.

And culturally speaking: It can be argued, using the above description of the data-free preferred models for dark matter, that the scientific process can be ignored if enough money and enough reputations are in jeopardy from seeking the truth.

And remember to consider: which side of the looking glass is the Hydrino Hypothesis on, Real or Bizarro? One model suggests dark matter is simply a form of hydrogen, and the other that dark matter is Unobtanium: non-baryonic, non-interacting WIMPs.

Personal Notes

The following correspondence began when I was able to see an early version of a paper on a topic not covered in this monograph, molecular Hydrino. In the subsequent correspondence it is revealed that at least some scientists can be persuaded that truth can be found outside the standard paradigm. The paper title and abstract:

From: Jonathan Phillips

Sent: Tuesday February 16, 2021 4:23 AM

To: Fred Hagen

Subject: Classical Quantum Mechanics

Sir-

Great Article with R.L. Mills. Hopefully, it will force some shift in the attitude of the physics community. In particular, I appreciate the citation of one of my many experimental studies that was designed, but failed, to debunk CQM [Classical Quantum Mechanics is synonymous with GUTCP].

I've attached a couple of articles I wrote on CQM theory. The original impetus for writing these articles was to debunk CQM. I discovered, while reviewing the chapter on Helium in the Mills “Theory of Everything” that CQM theory predicts the two electrons in Helium to be of different sizes and energies. I believed, at the time, there was irrefutable data supporting the Pauli Exclusion Principle (PEP) and therefore reasoned that the failure of CQM to be consistent with this well documented Principle was an effective debunk to the entire CQM theory. I began, to RLM’s horror, to write an article showing the CQM theory does not work....Well, something odd/totally unexpected happened during my research: I discovered there is zero experimental evidence consistent with the PEP. Not a shred of spectroscopy. The emperor has no clothes.

In contrast, the predictions of the CQM model precisely agree with experiment. Helium has two electrons in the “ground state,” one at -24.6 eV and one at -54.4 eV. This agrees with ALL spectroscopy. Once again, the data, spectroscopy in this case, fails to debunk CQM. So, I think you, as an authority in spectroscopy, may find this particular set of articles to be interesting??

I hope you will read the attached and agree. Let me know.

Unfortunately, this is not the kind of work the major journals will even consider considering. Physics Essays is the best apolitical/science-based alternative. It is a miracle it exists.

V/R

Jonathan Phillips

From: Fred Hagen

Sent: Thursday, February 18, 2021 7:37 AM

To: Jonathan Phillips

Subject: Re: Classical Quantum Mechanics

Dear Jonathan,

Thank you very much for your e-mail. It looks like we share a common experience with CQM, although yours preceded mine by one and a half decades. When I was asked more than a year ago to have a look at some of Randy Mills’ hydrino-containing samples I was ignorant of the word, let alone the concept of, hydrino. And yes, why should a biochemist worry about theoretical discussions going on in hard-core physics. Well, perhaps one good reason would be that when I was first taught QM as a freshman in chemistry (1970) I felt very unhappy about apparently not being able to understand the theory. I “resolved” matters by adopting what you call DQM; in other words I learned the tricks of doing approximate calculations for spectroscopy, although in the back of my mind there was always this nagging feeling that there is something rotten in the state of Denmark. Enter the hydrino samples. I did a quick round of reading and I concluded that this must all be quackery, and that it shouldn’t be too much of a spectroscopic effort to disprove the hydrino claim. Then I started to measure and in the course of a year or so I found myself to gradually make a 180 degrees turn. In this respect covid-19 had a rare positive effect: the lab was essentially deserted, and so I could do multi-day-long runs (unusual in EPR spectroscopy) without interference from others. The end result is the posted article, which, at the time of writing, we have not yet been able to get accepted by an “established” journal.

I read your articles with great interest. I know this sounds like the standard first sentence of an editor’s rejection letter, so just as a factual proof (and no pedantry intended) I found the following typos: in the 2007 paper on p 569 SQM → DQM, and on p 586 a sign is missing between the first and second term in equation 35; in the 2014 paper line 944: will is → is; and line 1003: ground → ground state. I am sorry that at the moment I do not have more substantial comments to offer other than to say that the conclusions you draw appear to me to be very reasonable and justified. Of course the problem with Physics Essays is that the physics academic establishment can (and will) always say that publications with impact factors well below unity also have relevance well below unity. That is why I try to get our work into an “established” journal. We have to see how long/far my stamina endures …

Being a biochemist I consider my possibilities to make definitive judgements on CQM rather limited. I tried to get this message across in our paper: what I, as an independent observer, measured is largely consistent with a particular part of CQM; alternative interpretations are quite unlikely. This should be a trigger for others to get to it, to go into their labs and try as hard as they can to disprove CQM, and thereby possibly increasing its support. We’ll see, we’ll see.

Best wishes,

Fred Hagen

From: Jonathan Phillips

Sent: Thursday, February 18, 2021 11:25 AM

To: Fred Hagen

Subject: Re: Classical Quantum Mechanics

Hi Fred-

Thank you!

I hope to continue this dialogue. Yes? Here is a topic: science theory!

I believe proper science includes these components: 1. If a theory doesn't agree with data, that theory is no longer valid. 2. A scientific theory must be such that it can be validated/invalidated by laboratory experiment. That is, it must include “predictions” regarding observable data that can be compared with existing data (e.g. spectra of helium, line broadening in stars) or can be tested with novel experimental work, e.g. your spectroscopic studies. 3. Theory should NOT contradict well demonstrated “laws” such as those Newton and Maxwell developed. 4. Expertise, prominence, etc. are not part of the scientific process, beyond critiquing the experimental methods. In my mind, CQM has survived all tests and attacks. In contrast, SQM fails 1, 2, 3, and is only supported by anti-4. One argument not made in my papers: SQM applied to helium is a “curve fit.” There are NO predictions. In all the models of helium, one varies a minimum of two parameters to match measured data. Not impressive, and not science as one cannot “test predictions” because there are none. In contrast, there are no variable parameters in the CQM model of helium. It is totally predictive, and supported by spectroscopy.

In conclusion, I believe that “cancel culture” became part of quantum (science in general?) long ago. That is why “anti-4” is the ONLY part of quantum discussion at present.

And, I am not against “metaphysics” properly labelled. Metaphysics is theory that is possibly plausible, but untestable- hence not “physics.” String Theory? The attached paper???

Best

Jonathan

P.S.-Regarding the corrections offered: I sent you the only version I have available which is the author proof. Final version may include the corrections you suggest, don't know! As I am no longer associated with any institution- except my own company- and not a subscriber to Physics Essays....

From: Fred Hagen

Sent: Saturday, February 20, 2021 10:54 AM

To: Jonathan Phillips

Subject: Re: Classical Quantum Mechanics

Hi Jonathan,

When I entered the University of Amsterdam for the first time now more than half a century ago I still remember that I had this strong urge to learn what “the scientific method” is all about, that is, to learn how to do science (contrary to, for example, the 1000 pages of organic chemistry reactions they were trying to feed me as facts to be stored in my brain). I went to the scientific book shop where I found this thing called “The logic of scientific discovery” by a guy named Karl Popper. It was no easy read in English, but I worked my way through the first part until I came to chapter 4 which was about falsifiability. That was the eye opener, or Aha Erlebnis as the Germans say, that I was looking for. It was all that simple: the scientific method is casting a theory not inconsistent with basic knowledge and then trying as hard as one can to disprove its predictions in experiments. And every time an attempt to falsify fails the theory gains in status. Until it eventually meets the killer experiment, and then the theory has to be replaced with a better theory. In other words, proving a theory is impossible, only disproving is possible. I actually wondered at the time why Popper had to write a book of nearly 500 pages where its brilliant essence easily fits on half an A4. A decade later, when I graduated in biochemistry, I got the same theory in a different form from my supervisor who offered me his “25-experiments rule”: when you do an experiment to bring some fact to light in biochemistry, you morally take it upon you to spend the next weeks, or months if necessary, to do 24 control experiments in which you try to prove your first experiment to be wrong. I think your first 3 components of proper science are another manifestation of that same principle, which Popper actually wrote down, and published, originally in German for the first time in 1935. 
In other words, Heisenberg and Schrödinger, and all the others in QM at that time and in the 85 years to follow were familiar with it, and so your component 4 is indeed not so much about science as about human psychology, chapter moral shortcomings.